This article explains how and why Forefront calculates standards proficiency to reflect student understanding.

Watch an on-demand webinar about Proficiency in Forefront here.

Understand Overall Score vs. Standards Proficiency

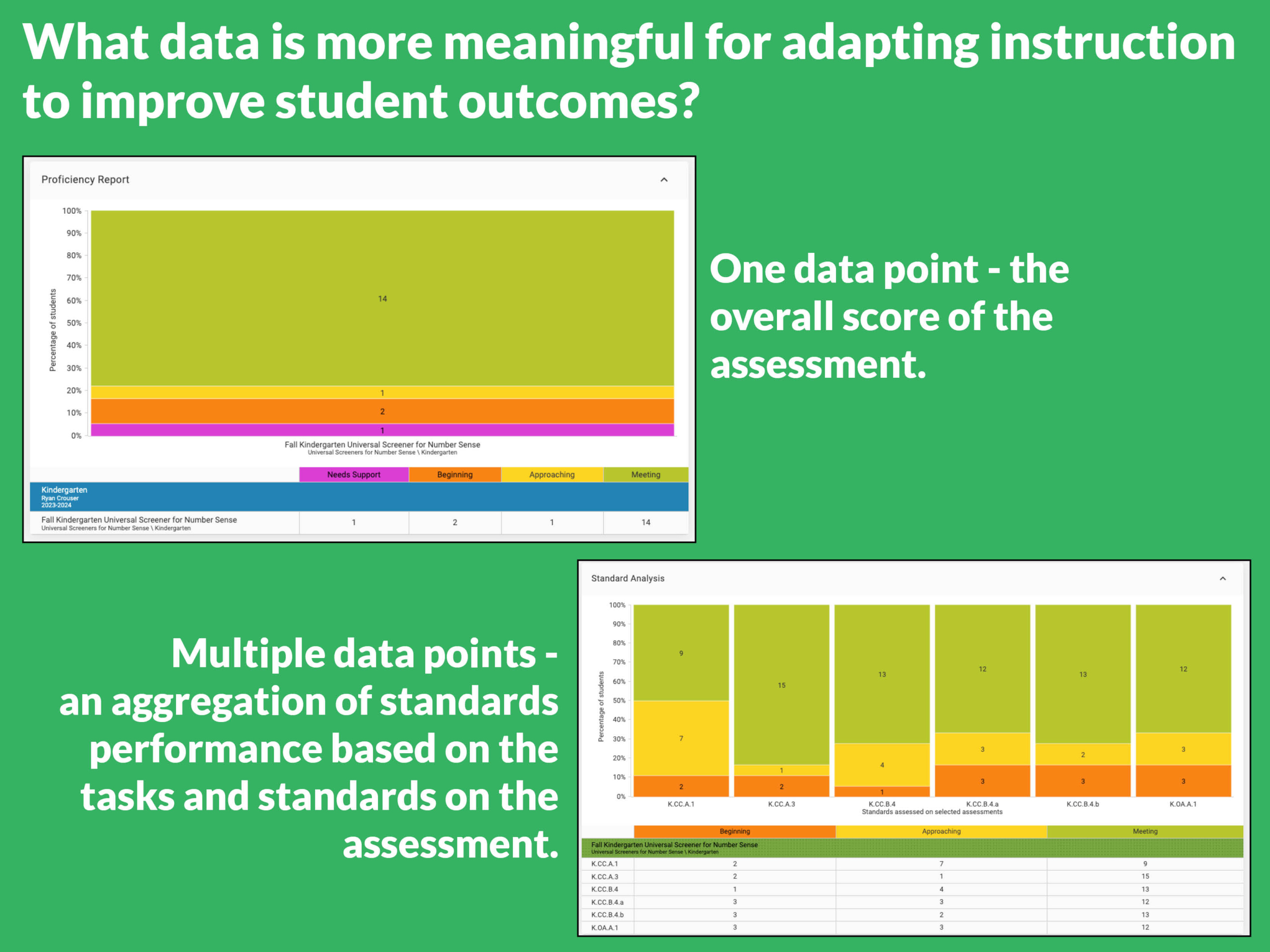

Forefront is built around the ideas of standards-based assessment, grading, and reporting. Often, reports and visualizations focus on standards proficiency instead of an overall score. Progress toward proficiency, as it is visualized throughout the platform, uses standards as the focus for understanding and communicating about student performance. (Note that "standards" is a flexible term in Forefront -- standards can be learning targets, stages of a progression, or frameworks of a skill.)

While there are places in Forefront where the overall score is used and useful, the data in front of users is often based on proficiency on a standard.

Standards Proficiency per Assessment

Assessments in Forefront are aligned to standards at the question level. For high-quality assessment, this is a perfect fit, as different questions assess evidence of student learning on different skills.

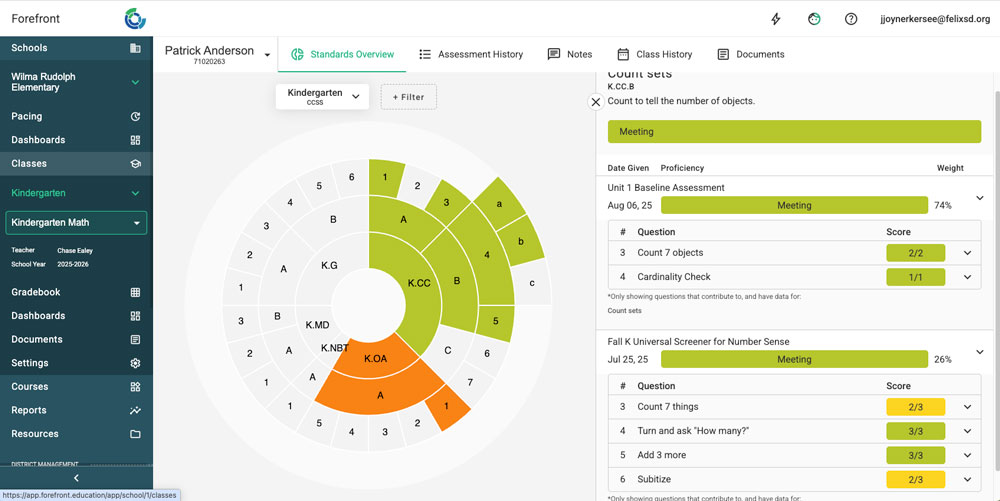

Below is a screenshot of the student wheel, which displays a single student's performance relative to the domains (inner ring), clusters (middle ring), and standards (outer ring) of Kindergarten CCSS mathematics. Clicking any node of the wheel opens the body of evidence that contributes to the proficiency. Here, the body of evidence is focused on data for CCSS.K.CC.B. Two assessments have questions aligned to this cluster: 4 questions on the fall USNS and 2 questions on the Unit 1 Baseline Assessment.

Forefront aggregates this data into a single data point that reflects performance across all of the assessments. It first averages the proficiency of each question or task within each assessment, then combines the assessment results over time, weighting more recent data more heavily.

How does this reflect student learning? In the example above, on the fall USNS, of the 4 questions aligned to K.CC.B, the student was proficient on two questions and one level below proficient on the other two. Because Forefront rounds up, this averages to Proficient. Then, the Unit 1 Baseline, which is more recent, factors in more heavily. Note that point values are not reflected in the aggregation -- each question carries equal weight.
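
The arithmetic for the fall USNS example can be sketched in a few lines of Python. This is an illustration only, not Forefront's code: the numeric scale (Proficient = 3, one level below = 2), the point values, and the `assessment_average` helper are all assumptions made for the example.

```python
from statistics import mean

# Assumed numeric scale, inferred from the proficiency bands in this article.
PROFICIENT = 3
BASIC = 2  # one level below Proficient

# Hypothetical question records for K.CC.B on the fall USNS:
# (point value, proficiency level). Point values are made up.
fall_usns_kccb = [(1, PROFICIENT), (2, PROFICIENT), (1, BASIC), (4, BASIC)]


def assessment_average(questions):
    """Average the proficiency levels, ignoring point values entirely."""
    return mean(level for _points, level in questions)


print(assessment_average(fall_usns_kccb))  # 2.5
```

Even though the made-up point values differ widely, each question contributes equally, so the result is the plain average of the four proficiency levels.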

What do the proficiency colors represent when the proficiencies are averaged?

Forefront rounds up.

- Well Below Basic (lowest level) is from 0 to below .5

- Below Basic is from .5 to below 1.5

- Basic is from 1.5 to below 2.5

- Proficient is from 2.5 to below 3.5

- Advanced (which many programs do not assess for or include) is 3.5 and above
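
As a rough sketch, the bands above can be expressed as a small lookup. The `level_for` function and the 0 to 4 numeric scale are hypothetical, and the sketch assumes that a value sitting exactly on a boundary rounds up to the higher level, in line with "Forefront rounds up."

```python
import math

# Level names in order, indexed by their assumed numeric value (0 to 4).
LEVELS = ["Well Below Basic", "Below Basic", "Basic", "Proficient", "Advanced"]


def level_for(average: float) -> str:
    """Map an averaged proficiency value to a level name, rounding halves up."""
    # floor(x + 0.5) rounds halves up. Python's built-in round() rounds
    # halves to the nearest even number, so round(2.5) would give 2.
    index = math.floor(average + 0.5)
    # Clamp to the valid range of levels.
    index = max(0, min(index, len(LEVELS) - 1))
    return LEVELS[index]


print(level_for(2.5))  # Proficient
print(level_for(1.4))  # Below Basic
```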

Evidence Across the Wheel

Forefront considers all data for the standard and any of its children (standards lower in the standards structure) and applies the weighted average to that.
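
One way to picture this roll-up is a walk down the standards structure that gathers every data point along the way. The sketch below is a simplified illustration with made-up data; the `CHILDREN` and `EVIDENCE` tables and the `collect_evidence` function are hypothetical and only show the gathering step, not the weighting.

```python
# Hypothetical slice of a standards structure: parent -> children.
CHILDREN = {
    "K.CC": ["K.CC.A", "K.CC.B"],
    "K.CC.B": ["K.CC.B.4", "K.CC.B.5"],
}

# Made-up proficiency data points recorded directly against each standard.
EVIDENCE = {
    "K.CC.A": [3],
    "K.CC.B": [3],
    "K.CC.B.4": [3, 2],
    "K.CC.B.5": [2],
}


def collect_evidence(standard):
    """Gather data for a standard and all standards below it."""
    scores = list(EVIDENCE.get(standard, []))
    for child in CHILDREN.get(standard, []):
        scores.extend(collect_evidence(child))
    return scores


print(collect_evidence("K.CC.B"))  # [3, 3, 2, 2]
print(collect_evidence("K.CC"))    # [3, 3, 3, 2, 2]
```

Under this reading, a cluster or domain node on the wheel draws on more evidence than any single standard beneath it.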

The Recency Weighting Algorithm

Forefront does not use a flat average -- instead, a weighted average gives the most recent assessment results the greatest weight. How does this reflect student learning? Rooted in the best practices of standards-based learning, giving more recent data more power reflects the fact that students can change their level of understanding through the learning process. With this approach, students are not "penalized" for an earlier lack of understanding.

Troubleshooting tip: Because of this recency calculation, as content gets more rigorous through the year, students can demonstrate lower levels of proficiency later in the year than they did earlier. This echoes the complexity around whether students are meeting current or end-of-year expectations. Many curricular assessments reflect current, moment-in-time expectations instead of end-of-year proficiency. This is because holding students to end-of-year expectations would provide less nuanced data to inform and guide daily instruction and family communication.

To accomplish this, Forefront's proprietary algorithm uses the date of each assessment to define how the influence of older results dissipates:

- The most recent evidence gets a full weight.

- Results that are 2 weeks older are weighted at approximately one third of the weight of the most recent results.

- Results that are 3 weeks older are weighted at approximately one quarter.

- Older evidence continues to become less and less influential on the overall representation of progress toward each standard.

Forefront's recency calculation uses the date of the assessment (as reported by the teacher). For example, if a student is assessed today on a particular standard that was previously assessed over two months ago, the new results would almost completely eclipse the old. However, if a student was assessed on a standard three days in a row, the three data points would be almost evenly averaged, with slightly more weight on the most recent data point.
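
To make the effect of recency weighting concrete, here is a minimal sketch. Forefront's actual algorithm is proprietary and is not reproduced here; the decay curve `1 / (1 + weeks_older)` is a hypothetical stand-in chosen only because it matches the reference points above (full weight for the newest result, about one third at 2 weeks, about one quarter at 3 weeks). The function names, dates, and scores are likewise assumptions.

```python
from datetime import date


def recency_weight(assessed_on: date, most_recent: date) -> float:
    """Hypothetical decay: 1.0 for the newest result, shrinking with age."""
    weeks_older = (most_recent - assessed_on).days / 7
    return 1 / (1 + weeks_older)


def weighted_proficiency(results):
    """results: list of (assessment date, average proficiency) pairs."""
    most_recent = max(assessed_on for assessed_on, _ in results)
    weights = [recency_weight(assessed_on, most_recent) for assessed_on, _ in results]
    total = sum(w * score for w, (_, score) in zip(weights, results))
    return total / sum(weights)


# Two results nine weeks apart: the newer one dominates.
far_apart = [(date(2024, 9, 3), 2.0), (date(2024, 11, 5), 3.0)]
print(round(weighted_proficiency(far_apart), 2))  # 2.91

# Three days in a row: close to the flat average of 2.33, nudged upward
# toward the most recent result.
consecutive = [
    (date(2024, 11, 4), 2.0),
    (date(2024, 11, 5), 2.0),
    (date(2024, 11, 6), 3.0),
]
print(round(weighted_proficiency(consecutive), 2))  # 2.38
```

With this stand-in curve, a result from nine weeks earlier carries about a tenth of the weight of the newest one, while results a day or two apart carry nearly equal weight.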

Recommendations Around Excluding Data Points

Assessment data can be excluded from the proficiency calculation. Before doing so, consider why the data is being excluded. In general, more data points build a more robust body of evidence. Pre-assessment data is helpful for seeing trends over time, and the recency algorithm weights more recent data more heavily, which gives students a chance to show growth. Watch this quick video to understand the impacts of excluding assessments.

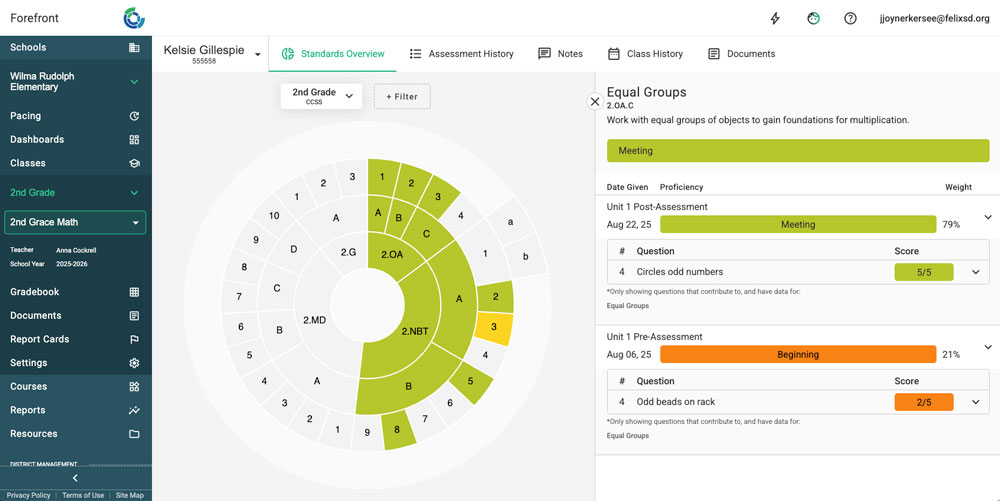

In the body of evidence below, see how an improvement in student performance on a standard from pre- to post-assessment is honored by the recency weighting.

Thinking about student evidence of learning in this way can take a mindset shift. Forefront's goal is to provide more meaningful data as teachers, districts, students, and families work with the idea that all students can demonstrate proficiency on critical skills.

Need more support?

Submit a support request or email our team at support@forefront.education.